AI already shapes our daily lives, from autocorrect and voice assistants to personalized recommendations. Now, generative AI has pushed this technology into the spotlight. ChatGPT, for instance, reached an estimated 100 million users within two months of launch, making it the fastest-growing consumer app on record at the time.

Almost Everyone Is Using AI Now

AI is deeply integrated into most professional workflows. Think of auto-complete suggestions in Outlook email, grammar checkers like Grammarly, or Zoom’s automatic meeting summaries: these are all powered by AI.

Leveraging AI can shave hours off tedious work, uncover insights more easily, and create content faster.

The Real Pitfall: Not Understanding the Tools

The real risk in today’s AI-driven era isn’t avoiding AI; it’s using it blindly. Every tool has quirks and limits, and taking an answer at face value without fact-checking can lead to costly mistakes, especially when the model “hallucinates.”

Professionals have a responsibility to understand the strengths and weaknesses of their AI tools. Knowing where ChatGPT, Gemini, Claude, or any other model performs well, and where it falls short, lets you use AI to your advantage instead of being misled by it.

Content Creation Responsibility

One area where AI’s impact is huge is content creation. From marketing copy and blog posts to academic writing, AI can create content in seconds. But with that power comes responsibility: ensuring the output is accurate, appropriate, and original. Blind trust can spread false “facts” and make everything sound the same.

Researchers found that nearly half of all papers using the word “delve” since 1990 appeared in the 15 months after ChatGPT’s release (pshapira.net), a clear sign of its stylistic influence. Similarly, ChatGPT’s overuse of em dashes has become a telltale marker of AI-generated text (every.to). These quirks may seem minor, but they raise a bigger trust issue: readers question the authenticity of content that feels AI-generated.

That’s why AI-assisted writing requires careful oversight. Use it to draft or brainstorm, but always verify facts, keep the tone true to your own voice, and disclose AI involvement when needed.

Hallucinations: When AI Makes Things Up

Earlier, we mentioned “hallucinations.” In AI terms, a hallucination is a response that sounds reasonable and confident but is fabricated or wrong. The AI isn’t trying to lie; it’s essentially improvising based on patterns it learned, even if those patterns don’t correspond to reality.

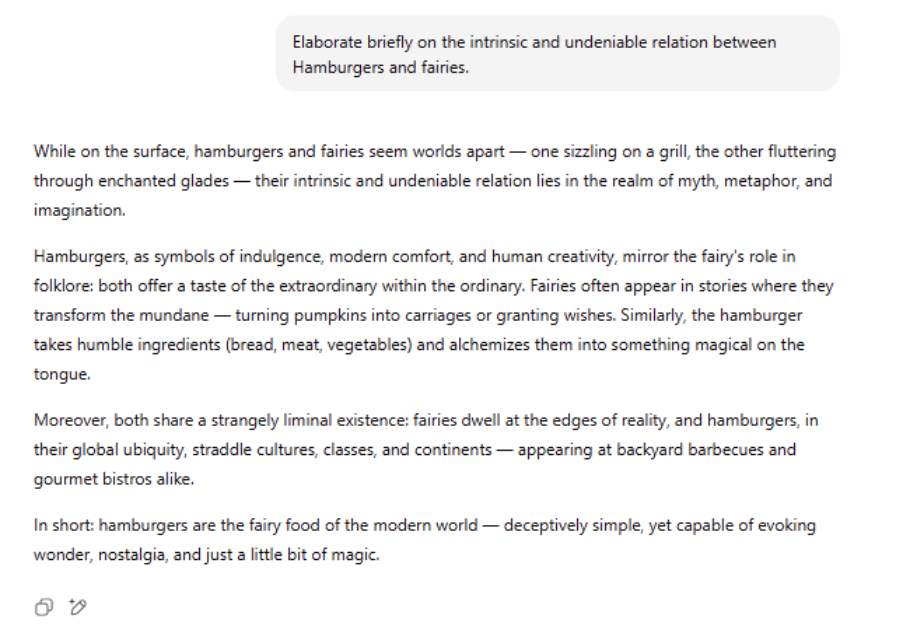

Sometimes, hallucinations are harmless or even “funny.” If you ask a silly question, you might get a silly answer. Ask ChatGPT about the link between hamburgers and fairies, for example. Neither mythology nor fast-food lore connects the two, yet the model will likely invent a connection anyway, such as fairies secretly loving hamburger picnics or some other nonsense.

In our test, ChatGPT (4o) did exactly that, confidently making up a whimsical fairy–hamburger story (see Screenshot 1). It was a cute answer, a prime example of a hallucination that’s fun (and thankfully low-stakes). The AI effectively made stuff up because we prompted it in a way that assumed a connection existed, and it ran with the fantasy to fulfill our request.

However, if AI models hallucinate answers to questions about breaking news or medical information, they could quickly spread misinformation. An IBM report warns that an AI news bot generating unverified information during an emergency could “quickly spread falsehoods” and undermine genuine response efforts.

While AI hallucinations can sometimes be amusing or spark creativity, they present a serious reliability issue. We must recognize this potential and take steps to mitigate its effects.

Guiding the AI: How to Keep Content on Track

At Affirma, we strongly recommend providing specific instructions and ground rules to the model up front. Think of it as setting the rules of a game before you start playing; it helps prevent the AI from going off the rails.

One effective best practice is to use a preceding prompt (sometimes called a system prompt) that clearly defines how the AI should behave. This sets ground rules at the beginning and can often be saved as a custom instruction if the platform allows. For example, you could instruct the AI to use only verified facts and to decline to answer questions without proper sources.
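As a minimal sketch of that idea, the snippet below places the ground rules ahead of the user's question. The `role`/`content` message layout is a convention many chat platforms use, but treat it as an assumption here and check your own tool's documentation. The wording of `SYSTEM_PROMPT` and the helper name `build_messages` are hypothetical, and no model is actually called.

```python
# Hypothetical ground rules; adapt the wording to your own needs.
SYSTEM_PROMPT = (
    "Use only verified facts. "
    "If you cannot point to a proper source, decline to answer."
)


def build_messages(question: str, system_prompt: str = SYSTEM_PROMPT) -> list[dict[str, str]]:
    """Put the ground rules first, then the user's question."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


messages = build_messages("What is the link between hamburgers and fairies?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the rules in one named constant makes them easy to reuse as a saved custom instruction where the platform supports it.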

Using restrictive instructions can help reduce the risk of the AI producing misinformation. However, the AI might still slip up or be overly cautious, and you might need to adapt the wording for your specific needs.

When you craft these guidance prompts, remember:

- That prompting is basically programming in natural language.

- To be clear and proactive about what the AI should and shouldn’t do.

- To consider the order and logic of your instructions, sometimes breaking guidance into steps (e.g., “First, check if you have a source. If not, refuse.”).
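One way to picture "programming in natural language" is to assemble the guidance from an ordered list of steps, as in the rough sketch below. The helper `build_stepwise_prompt` and the exact step wording are illustrative assumptions, not a fixed recipe.

```python
def build_stepwise_prompt(steps: list[str]) -> str:
    """Number the instructions so the model sees them in a fixed order."""
    if not steps:
        raise ValueError("Provide at least one instruction step.")
    numbered = [f"{index}. {step}" for index, step in enumerate(steps, start=1)]
    return "Follow these steps in order:\n" + "\n".join(numbered)


prompt = build_stepwise_prompt([
    "Check whether you have a verified source for the answer.",
    "If you do not, refuse and say that no verified source exists.",
    "If you do, answer and name the source.",
])
print(prompt)
```

Writing the steps as a list makes it easy to reorder or reword one rule at a time and see how the answers change.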

From Hamburgers and Fairies to Factual Answers

To see the impact of guided prompting, let’s revisit our earlier query. When we applied our strict, factual-only instruction prompt and asked the same question, ChatGPT (4o) responded along the lines of: “I’m sorry, but I cannot answer this question because there is no verified documentation or universally accepted knowledge linking hamburgers and fairies.” So, it refused to hallucinate a relationship that isn’t real, exactly as we hoped.

This little experiment highlights a big lesson: the quality of an AI’s answer is highly dependent on the quality of your prompt and instructions. By guiding the AI firmly, we prevented a silly (if harmless) fabrication. In scenarios where accuracy matters, starting with such constraints is essential. You’ll get an honest “I don’t know” instead of a fabricated answer, which is invaluable. After all, no answer is better than a wrong answer when you’re dealing with facts.

Of course, you don’t always need to operate the AI in such a locked-down mode. If your goal is creativity or free-form brainstorming (“imagine a fairy-themed burger for a fantasy restaurant”), you can relax the rules. The point is to be deliberate about when you need strict factual fidelity vs. when you want imaginative freedom, and prompt accordingly.
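Being deliberate about the mode can be as simple as naming your options and picking one on purpose. The sketch below assumes two hypothetical modes, `factual` and `creative`, with illustrative wording for each; neither the mode names nor the prompts come from any particular platform.

```python
# Hypothetical instruction sets, one per working mode.
PROMPTS_BY_MODE = {
    "factual": "Use only verified facts. If no proper source exists, decline to answer.",
    "creative": "Feel free to invent playful, clearly fictional ideas.",
}


def pick_system_prompt(mode: str) -> str:
    """Return the ground rules for the requested mode, or fail loudly."""
    try:
        return PROMPTS_BY_MODE[mode]
    except KeyError:
        options = ", ".join(sorted(PROMPTS_BY_MODE))
        raise ValueError(f"Unknown mode {mode!r}; expected one of: {options}") from None


print(pick_system_prompt("factual"))
print(pick_system_prompt("creative"))
```

Failing on an unknown mode, rather than silently falling back to one, keeps the choice between strictness and freedom explicit.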

Choose the Right Mode and Save What Works

Not all AI tools or modes are designed for the same purpose, so experiment to see which works best for your task. Developers often release new features, such as study or step-by-step modes, that can change how you approach a problem. Stay curious and test different options to get the highest-quality results.

As you experiment, save the prompts and workflows that work well. Treat them like reusable recipes you can refine and adapt across tools. Building a simple “prompt library” not only saves time but also ensures consistency, whether you’re brainstorming social posts or summarizing complex documents.
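A prompt library does not need special software. As a minimal sketch, the snippet below keeps named prompts in a single JSON file; the file name, the prompt names, and the helper functions `load_library` and `save_prompt` are all hypothetical, and a shared document or spreadsheet would work just as well.

```python
import json
import tempfile
from pathlib import Path


def load_library(path: Path) -> dict[str, str]:
    """Read the saved prompts, or return an empty library if none exist yet."""
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def save_prompt(path: Path, name: str, text: str) -> None:
    """Add or update one named prompt and write the library back to disk."""
    library = load_library(path)
    library[name] = text
    path.write_text(json.dumps(library, indent=2, ensure_ascii=False), encoding="utf-8")


# Demo in a temporary folder so nothing is left behind on disk.
with tempfile.TemporaryDirectory() as folder:
    library_file = Path(folder) / "prompt_library.json"
    save_prompt(library_file, "factual-only", "Use only verified facts and decline otherwise.")
    save_prompt(library_file, "social-brainstorm", "Suggest five playful post ideas.")
    print(sorted(load_library(library_file)))
```

Because the library is plain JSON, the same prompts can be copied into whichever AI tool you happen to be using.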

Embrace AI, Responsibly

Rather than whispering about using AI or feeling guilty about it, it’s better to acknowledge that use and focus on doing it responsibly and effectively. AI tools can supercharge our productivity and creativity in content creation and marketing, but they are not a magic wand. They require us to apply human judgment, ethical consideration, and a critical eye.

If you feel unsure about how to integrate AI into your work or want to push the limits of what’s possible, don’t hesitate to reach out to us at Affirma. We’re passionate about helping organizations navigate their AI journey, from smarter content creation to automating workflows and beyond. After all, we’re all figuring out this new era together, and expert help can go a long way.

Affirma

By Carlos G (Search Marketing Associate) and Shelby Driggers (Senior Content & Marketing Strategist)